{

"cells": [

{

"cell_type": "markdown",

"id": "43278325",

"metadata": {},

"source": [

"\n",

"<a id='wald-friedman'></a>\n",

"<div id=\"qe-notebook-header\" align=\"right\" style=\"text-align:right;\">\n",

" <a href=\"https://quantecon.org/\" title=\"quantecon.org\">\n",

" <img style=\"width:250px;display:inline;\" width=\"250px\" src=\"https://assets.quantecon.org/img/qe-menubar-logo.svg\" alt=\"QuantEcon\">\n",

" </a>\n",

"</div>"

]

},

{

"cell_type": "markdown",

"id": "50c0a3df",

"metadata": {},

"source": [

"# A Problem that Stumped Milton Friedman\n",

"\n",

"(and that Abraham Wald solved by inventing sequential analysis)\n",

"\n",

"\n",

"<a id='index-1'></a>"

]

},

{

"cell_type": "markdown",

"id": "a106f940",

"metadata": {},

"source": [

"## Contents\n",

"\n",

"- [A Problem that Stumped Milton Friedman](#A-Problem-that-Stumped-Milton-Friedman) \n",

" - [Overview](#Overview) \n",

" - [Source of the Problem](#Source-of-the-Problem) \n",

" - [Neyman-Pearson Formulation](#Neyman-Pearson-Formulation) \n",

" - [Wald’s Sequential Formulation](#Wald’s-Sequential-Formulation) \n",

" - [Links Between $ A,B $ and $ \\alpha, \\beta $](#Links-Between-$-A,B-$-and-$-\\alpha,-\\beta-$) \n",

" - [Simulations](#Simulations) \n",

" - [Related Lectures](#Related-Lectures) "

]

},

{

"cell_type": "markdown",

"id": "3e3ce66d",

"metadata": {},

"source": [

"## Overview\n",

"\n",

"This is the first of two lectures about a statistical decision problem that a US Navy Captain presented to Milton\n",

"Friedman and W. Allen Wallis during World War II when they were analysts at the U.S. Government’s Statistical Research Group at Columbia University.\n",

"\n",

"This problem led Abraham Wald [[Wald, 1947](https://python.quantecon.org/zreferences.html#id113)] to formulate **sequential analysis**,\n",

"an approach to statistical decision problems that is intimately related to dynamic programming.\n",

"\n",

"In the spirit of [this lecture](https://python.quantecon.org/prob_meaning.html), the present lecture and its [sequel](https://python.quantecon.org/wald_friedman_2.html) approach the problem from two distinct points of view.\n",

"\n",

"In this lecture, we describe Wald’s formulation of the problem from the perspective of a statistician\n",

"working within the Neyman-Pearson tradition of a frequentist statistician who thinks about testing hypotheses and consequently use laws of large numbers to investigate limiting properties of particular statistics under a given **hypothesis**, i.e., a vector of **parameters** that pins down a particular member of a manifold of statistical models that interest the statistician.\n",

"\n",

"- From [this lecture](https://python.quantecon.org/prob_meaning.html), please remember that a frequentist statistician routinely calculates functions of sequences of random variables, conditioning on a vector of parameters. \n",

"\n",

"\n",

"In [this sequel](https://python.quantecon.org/wald_friedman_2.html) we’ll discuss another formulation that adopts the perspective of a **Bayesian statistician** who views\n",

"parameter vectors as vectors of random variables that are jointly distributed with observable variables that he is concerned about.\n",

"\n",

"Because we are taking a frequentist perspective that is concerned about relative frequencies conditioned on alternative parameter values, i.e.,\n",

"alternative **hypotheses**, key ideas in this lecture\n",

"\n",

"- Type I and type II statistical errors \n",

" - a type I error occurs when you reject a null hypothesis that is true \n",

" - a type II error occures when you accept a null hypothesis that is false \n",

"- Abraham Wald’s **sequential probability ratio test** \n",

"- The **power** of a frequentist statistical test \n",

"- The **size** of a frequentist statistical test \n",

"- The **critical region** of a statistical test \n",

"- A **uniformly most powerful test** \n",

"- The role of a Law of Large Numbers (LLN) in interpreting **power** and **size** of a frequentist statistical test \n",

"\n",

"\n",

"We’ll begin with some imports:"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "5b620aa2",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"import numpy as np\n",

"import matplotlib.pyplot as plt\n",

"from numba import njit, prange\n",

"from numba.experimental import jitclass\n",

"from math import gamma\n",

"from scipy.integrate import quad\n",

"from scipy.stats import beta\n",

"from collections import namedtuple\n",

"import pandas as pd"

]

},

{

"cell_type": "markdown",

"id": "76965554",

"metadata": {},

"source": [

"This lecture uses ideas studied in [this lecture](https://python.quantecon.org/likelihood_ratio_process.html) and [this lecture](https://python.quantecon.org/likelihood_bayes.html)."

]

},

{

"cell_type": "markdown",

"id": "3e7ca193",

"metadata": {},

"source": [

"## Source of the Problem\n",

"\n",

"On pages 137-139 of his 1998 book *Two Lucky People* with Rose Friedman [[Friedman and Friedman, 1998](https://python.quantecon.org/zreferences.html#id108)],\n",

"Milton Friedman described a problem presented to him and Allen Wallis\n",

"during World War II, when they worked at the US Government’s\n",

"Statistical Research Group at Columbia University.\n",

"\n",

">**Note**\n",

">\n",

">See pages 25 and 26 of Allen Wallis’s 1980 article [[Wallis, 1980](https://python.quantecon.org/zreferences.html#id5)] about the Statistical Research Group at Columbia University during World War II for his account of the episode and for important contributions that Harold Hotelling made to formulating the problem. Also see chapter 5 of Jennifer Burns book about\n",

"Milton Friedman [[Burns, 2023](https://python.quantecon.org/zreferences.html#id6)].\n",

"\n",

"Let’s listen to Milton Friedman tell us what happened\n",

"\n",

"> In order to understand the story, it is necessary to have an idea of a\n",

"simple statistical problem, and of the standard procedure for dealing\n",

"with it. The actual problem out of which sequential analysis grew will\n",

"serve. The Navy has two alternative designs (say A and B) for a\n",

"projectile. It wants to determine which is superior. To do so it\n",

"undertakes a series of paired firings. On each round, it assigns the\n",

"value 1 or 0 to A accordingly as its performance is superior or inferior\n",

"to that of B and conversely 0 or 1 to B. The Navy asks the statistician\n",

"how to conduct the test and how to analyze the results.\n",

"\n",

"\n",

"> The standard statistical answer was to specify a number of firings (say\n",

"1,000) and a pair of percentages (e.g., 53% and 47%) and tell the client\n",

"that if A receives a 1 in more than 53% of the firings, it can be\n",

"regarded as superior; if it receives a 1 in fewer than 47%, B can be\n",

"regarded as superior; if the percentage is between 47% and 53%, neither\n",

"can be so regarded.\n",

"\n",

"\n",

"> When Allen Wallis was discussing such a problem with (Navy) Captain\n",

"Garret L. Schyler, the captain objected that such a test, to quote from\n",

"Allen’s account, may prove wasteful. If a wise and seasoned ordnance\n",

"officer like Schyler were on the premises, he would see after the first\n",

"few thousand or even few hundred [rounds] that the experiment need not\n",

"be completed either because the new method is obviously inferior or\n",

"because it is obviously superior beyond what was hoped for\n",

"$ \\ldots $.\n",

"\n",

"\n",

"Friedman and Wallis worked on the problem but, after realizing that\n",

"they were not able to solve it, they described the problem to Abraham Wald.\n",

"\n",

"That started Wald on the path that led him to *Sequential Analysis* [[Wald, 1947](https://python.quantecon.org/zreferences.html#id113)]."

]

},

{

"cell_type": "markdown",

"id": "542a430e",

"metadata": {},

"source": [

"## Neyman-Pearson Formulation\n",

"\n",

"It is useful to begin by describing the theory underlying the test\n",

"that Navy Captain G. S. Schuyler had been told to use and that led him\n",

"to approach Milton Friedman and Allan Wallis to convey his conjecture\n",

"that superior practical procedures existed.\n",

"\n",

"Evidently, the Navy had told Captain Schuyler to use what was then a state-of-the-art\n",

"Neyman-Pearson hypothesis test.\n",

"\n",

"We’ll rely on Abraham Wald’s [[Wald, 1947](https://python.quantecon.org/zreferences.html#id113)] elegant summary of Neyman-Pearson theory.\n",

"\n",

"Watch for these features of the setup:\n",

"\n",

"- the assumption of a *fixed* sample size $ n $ \n",

"- the application of laws of large numbers, conditioned on alternative\n",

" probability models, to interpret probabilities $ \\alpha $ and\n",

" $ \\beta $ of the type I and type II errors defined in the Neyman-Pearson theory \n",

"\n",

"\n",

"In chapter 1 of **Sequential Analysis** [[Wald, 1947](https://python.quantecon.org/zreferences.html#id113)] Abraham Wald summarizes the\n",

"Neyman-Pearson approach to hypothesis testing.\n",

"\n",

"Wald frames the problem as making a decision about a probability\n",

"distribution that is partially known.\n",

"\n",

"(You have to assume that *something* is already known in order to state a well-posed\n",

"problem – usually, *something* means *a lot*)\n",

"\n",

"By limiting what is unknown, Wald uses the following simple structure\n",

"to illustrate the main ideas:\n",

"\n",

"- A decision-maker wants to decide which of two distributions\n",

" $ f_0 $, $ f_1 $ govern an IID random variable $ z $. \n",

"- The null hypothesis $ H_0 $ is the statement that $ f_0 $\n",

" governs the data. \n",

"- The alternative hypothesis $ H_1 $ is the statement that\n",

" $ f_1 $ governs the data. \n",

"- The problem is to devise and analyze a test of hypothesis\n",

" $ H_0 $ against the alternative hypothesis $ H_1 $ on the\n",

" basis of a sample of a fixed number $ n $ independent\n",

" observations $ z_1, z_2, \\ldots, z_n $ of the random variable\n",

" $ z $. \n",

"\n",

"\n",

"To quote Abraham Wald,\n",

"\n",

"> A test procedure leading to the acceptance or rejection of the [null]\n",

"hypothesis in question is simply a rule specifying, for each possible\n",

"sample of size $ n $, whether the [null] hypothesis should be accepted\n",

"or rejected on the basis of the sample. This may also be expressed as\n",

"follows: A test procedure is simply a subdivision of the totality of\n",

"all possible samples of size $ n $ into two mutually exclusive\n",

"parts, say part 1 and part 2, together with the application of the\n",

"rule that the [null] hypothesis be accepted if the observed sample is\n",

"contained in part 2. Part 1 is also called the critical region. Since\n",

"part 2 is the totality of all samples of size $ n $ which are not\n",

"included in part 1, part 2 is uniquely determined by part 1. Thus,\n",

"choosing a test procedure is equivalent to determining a critical\n",

"region.\n",

"\n",

"\n",

"Let’s listen to Wald longer:\n",

"\n",

"> As a basis for choosing among critical regions the following\n",

"considerations have been advanced by Neyman and Pearson: In accepting\n",

"or rejecting $ H_0 $ we may commit errors of two kinds. We commit\n",

"an error of the first kind if we reject $ H_0 $ when it is true;\n",

"we commit an error of the second kind if we accept $ H_0 $ when\n",

"$ H_1 $ is true. After a particular critical region $ W $ has\n",

"been chosen, the probability of committing an error of the first\n",

"kind, as well as the probability of committing an error of the second\n",

"kind is uniquely determined. The probability of committing an error\n",

"of the first kind is equal to the probability, determined by the\n",

"assumption that $ H_0 $ is true, that the observed sample will be\n",

"included in the critical region $ W $. The probability of\n",

"committing an error of the second kind is equal to the probability,\n",

"determined on the assumption that $ H_1 $ is true, that the\n",

"probability will fall outside the critical region $ W $. For any\n",

"given critical region $ W $ we shall denote the probability of an\n",

"error of the first kind by $ \\alpha $ and the probability of an\n",

"error of the second kind by $ \\beta $.\n",

"\n",

"\n",

"Let’s listen carefully to how Wald applies law of large numbers to\n",

"interpret $ \\alpha $ and $ \\beta $:\n",

"\n",

"> The probabilities $ \\alpha $ and $ \\beta $ have the\n",

"following important practical interpretation: Suppose that we draw a\n",

"large number of samples of size $ n $. Let $ M $ be the\n",

"number of such samples drawn. Suppose that for each of these\n",

"$ M $ samples we reject $ H_0 $ if the sample is included in\n",

"$ W $ and accept $ H_0 $ if the sample lies outside\n",

"$ W $. In this way we make $ M $ statements of rejection or\n",

"acceptance. Some of these statements will in general be wrong. If\n",

"$ H_0 $ is true and if $ M $ is large, the probability is\n",

"nearly $ 1 $ (i.e., it is practically certain) that the\n",

"proportion of wrong statements (i.e., the number of wrong statements\n",

"divided by $ M $) will be approximately $ \\alpha $. If\n",

"$ H_1 $ is true, the probability is nearly $ 1 $ that the\n",

"proportion of wrong statements will be approximately $ \\beta $.\n",

"Thus, we can say that in the long run [ here Wald applies law of\n",

"large numbers by driving $ M \\rightarrow \\infty $ (our comment,\n",

"not Wald’s) ] the proportion of wrong statements will be\n",

"$ \\alpha $ if $ H_0 $is true and $ \\beta $ if\n",

"$ H_1 $ is true.\n",

"\n",

"\n",

"The quantity $ \\alpha $ is called the *size* of the critical region,\n",

"and the quantity $ 1-\\beta $ is called the *power* of the critical\n",

"region.\n",

"\n",

"Wald notes that\n",

"\n",

"> one critical region $ W $ is more desirable than another if it\n",

"has smaller values of $ \\alpha $ and $ \\beta $. Although\n",

"either $ \\alpha $ or $ \\beta $ can be made arbitrarily small\n",

"by a proper choice of the critical region $ W $, it is possible\n",

"to make both $ \\alpha $ and $ \\beta $ arbitrarily small for a\n",

"fixed value of $ n $, i.e., a fixed sample size.\n",

"\n",

"\n",

"Wald summarizes Neyman and Pearson’s setup as follows:\n",

"\n",

"> Neyman and Pearson show that a region consisting of all samples\n",

"$ (z_1, z_2, \\ldots, z_n) $ which satisfy the inequality\n",

"\n",

"$$\n",

"\\frac{ f_1(z_1) \\cdots f_1(z_n)}{f_0(z_1) \\cdots f_0(z_n)} \\geq k\n",

"$$\n",

"\n",

"is a most powerful critical region for testing the hypothesis\n",

"$ H_0 $ against the alternative hypothesis $ H_1 $. The term\n",

"$ k $ on the right side is a constant chosen so that the region\n",

"will have the required size $ \\alpha $.\n",

"\n",

"\n",

"Wald goes on to discuss Neyman and Pearson’s concept of *uniformly most\n",

"powerful* test.\n",

"\n",

"Here is how Wald introduces the notion of a sequential test\n",

"\n",

"> A rule is given for making one of the following three decisions at any stage of\n",

"the experiment (at the m th trial for each integral value of m ): (1) to\n",

"accept the hypothesis H , (2) to reject the hypothesis H , (3) to\n",

"continue the experiment by making an additional observation. Thus, such\n",

"a test procedure is carried out sequentially. On the basis of the first\n",

"observation, one of the aforementioned decision is made. If the first or\n",

"second decision is made, the process is terminated. If the third\n",

"decision is made, a second trial is performed. Again, on the basis of\n",

"the first two observations, one of the three decision is made. If the\n",

"third decision is made, a third trial is performed, and so on. The\n",

"process is continued until either the first or the second decisions is\n",

"made. The number n of observations required by such a test procedure is\n",

"a random variable, since the value of n depends on the outcome of the\n",

"observations."

]

},

{

"cell_type": "markdown",

"id": "f1bdbeec",

"metadata": {},

"source": [

"## Wald’s Sequential Formulation\n",

"\n",

"In contradistinction to Neyman and Pearson’s formulation of the problem, in Wald’s formulation\n",

"\n",

"- The sample size $ n $ is not fixed but rather a random variable. \n",

"- Two parameters $ A $ and $ B $ that are related to but distinct from Neyman and Pearson’s $ \\alpha $ and $ \\beta $;\n",

" $ A $ and $ B $ characterize cut-off rules that Wald uses to determine the random variable $ n $ as a function of random outcomes. \n",

"\n",

"\n",

"Here is how Wald sets up the problem.\n",

"\n",

"A decision-maker can observe a sequence of draws of a random variable $ z $.\n",

"\n",

"He (or she) wants to know which of two probability distributions $ f_0 $ or $ f_1 $ governs $ z $.\n",

"\n",

"To illustrate, let’s inspect some beta distributions.\n",

"\n",

"The density of a Beta probability distribution with parameters $ a $ and $ b $ is\n",

"\n",

"$$\n",

"f(z; a, b) = \\frac{\\Gamma(a+b) z^{a-1} (1-z)^{b-1}}{\\Gamma(a) \\Gamma(b)}\n",

"\\quad \\text{where} \\quad\n",

"\\Gamma(t) := \\int_{0}^{\\infty} x^{t-1} e^{-x} dx\n",

"$$\n",

"\n",

"The next figure shows two beta distributions."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "1c9f677f",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"@njit\n",

"def p(x, a, b):\n",

" r = gamma(a + b) / (gamma(a) * gamma(b))\n",

" return r * x**(a-1) * (1 - x)**(b-1)\n",

"\n",

"f0 = lambda x: p(x, 1, 1)\n",

"f1 = lambda x: p(x, 9, 9)\n",

"grid = np.linspace(0, 1, 50)\n",

"\n",

"fig, ax = plt.subplots(figsize=(10, 8))\n",

"\n",

"ax.set_title(\"Original Distributions\")\n",

"ax.plot(grid, f0(grid), lw=2, label=\"$f_0$\")\n",

"ax.plot(grid, f1(grid), lw=2, label=\"$f_1$\")\n",

"\n",

"ax.legend()\n",

"ax.set(xlabel=\"$z$ values\", ylabel=\"probability of $z_k$\")\n",

"\n",

"plt.tight_layout()\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"id": "6a85013f",

"metadata": {},

"source": [

"Conditional on knowing that successive observations are drawn from distribution $ f_0 $, the sequence of\n",

"random variables is independently and identically distributed (IID).\n",

"\n",

"Conditional on knowing that successive observations are drawn from distribution $ f_1 $, the sequence of\n",

"random variables is also independently and identically distributed (IID).\n",

"\n",

"But the observer does not know which of the two distributions generated the sequence.\n",

"\n",

"For reasons explained in [Exchangeability and Bayesian Updating](https://python.quantecon.org/exchangeable.html), this means that the sequence is not\n",

"IID.\n",

"\n",

"The observer has something to learn, namely, whether the observations are drawn from $ f_0 $ or from $ f_1 $.\n",

"\n",

"The decision maker wants to decide which of the two distributions is generating outcomes."

]

},

{

"cell_type": "markdown",

"id": "c7763a97",

"metadata": {},

"source": [

"### Type I and Type II Errors\n",

"\n",

"If we regard $ f=f_0 $ as a null hypothesis and $ f=f_1 $ as an alternative hypothesis,\n",

"then\n",

"\n",

"- a type I error is an incorrect rejection of a true null hypothesis (a “false positive”) \n",

"- a type II error is a failure to reject a false null hypothesis (a “false negative”) \n",

"\n",

"\n",

"To repeat ourselves\n",

"\n",

"- $ \\alpha $ is the probability of a type I error \n",

"- $ \\beta $ is the probability of a type II error "

]

},

{

"cell_type": "markdown",

"id": "e38a1398",

"metadata": {},

"source": [

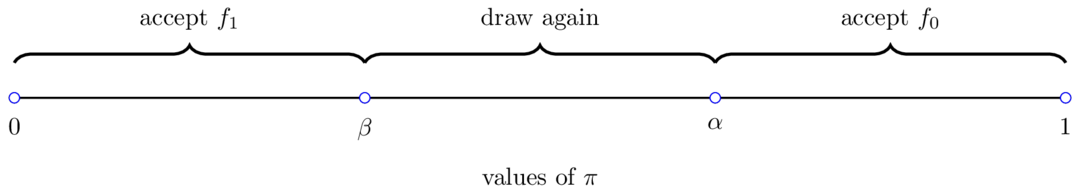

"### Choices\n",

"\n",

"After observing $ z_k, z_{k-1}, \\ldots, z_0 $, the decision-maker\n",

"chooses among three distinct actions:\n",

"\n",

"- He decides that $ f = f_0 $ and draws no more $ z $’s \n",

"- He decides that $ f = f_1 $ and draws no more $ z $’s \n",

"- He postpones deciding now and instead chooses to draw a\n",

" $ z_{k+1} $ \n",

"\n",

"\n",

"Wald proceeds as follows.\n",

"\n",

"He defines\n",

"\n",

"- $ p_{0m} = f_0(z_0) \\cdots f_0(z_m) $ \n",

"- $ p_{1m} = f_1(z_0) \\cdots f_1(z_m) $ \n",

"- $ L_{m} = \\frac{p_{1m}}{p_{0m}} $ \n",

"\n",

"\n",

"Here $ \\{L_m\\}_{m=0}^\\infty $ is a **likelihood ratio process**.\n",

"\n",

"One of Wald’s sequential decision rule is parameterized by two real numbers $ B < A $.\n",

"\n",

"For a given pair $ A, B $ the decision rule is\n",

"\n",

"$$\n",

"\\begin{aligned}\n",

"\\textrm { accept } f=f_1 \\textrm{ if } L_m \\geq A \\\\\n",

"\\textrm { accept } f=f_0 \\textrm{ if } L_m \\leq B \\\\\n",

"\\textrm { draw another } z \\textrm{ if } B < L_m < A\n",

"\\end{aligned}\n",

"$$\n",

"\n",

"The following figure illustrates aspects of Wald’s procedure.\n",

"\n",

""

]

},

{

"cell_type": "markdown",

"id": "4633c6b0",

"metadata": {},

"source": [

"## Links Between $ A,B $ and $ \\alpha, \\beta $\n",

"\n",

"In chapter 3 of **Sequential Analysis** [[Wald, 1947](https://python.quantecon.org/zreferences.html#id113)] Wald establishes the inequalities\n",

"\n",

"$$\n",

"\\begin{aligned} \n",

" \\frac{\\alpha}{1 -\\beta} & \\leq \\frac{1}{A} \\\\\n",

" \\frac{\\beta}{1 - \\alpha} & \\leq B \n",

"\\end{aligned}\n",

"$$\n",

"\n",

"His analysis of these inequalities leads Wald to recommend the following approximations as rules for setting\n",

"$ A $ and $ B $ that come close to attaining a decision maker’s target values for probabilities $ \\alpha $ of\n",

"a type I and $ \\beta $ of a type II error:\n",

"\n",

"\n",

"<a id='equation-eq-waldrule'></a>\n",

"$$\n",

"\\begin{aligned}\n",

"A \\approx a(\\alpha,\\beta) & \\equiv \\frac{1-\\beta}{\\alpha} \\\\\n",

"B \\approx b(\\alpha,\\beta) & \\equiv \\frac{\\beta}{1-\\alpha} \n",

"\\end{aligned} \\tag{22.1}\n",

"$$\n",

"\n",

"For small values of $ \\alpha $ and $ \\beta $, Wald shows that approximation [(22.1)](#equation-eq-waldrule) provides a good way to set $ A $ and $ B $.\n",

"\n",

"In particular, Wald constructs a mathematical argument that leads him to conclude that the use of approximation\n",

"[(22.1)](#equation-eq-waldrule) rather than the true functions $ A (\\alpha, \\beta), B(\\alpha,\\beta) $ for setting $ A $ and $ B $\n",

"\n",

"> $ \\ldots $ cannot result in any appreciable increase in the value of either $ \\alpha $ or $ \\beta $. In other words,\n",

"for all practical purposes the test corresponding to $ A = a(\\alpha, \\beta), B = b(\\alpha,\\beta) $ provides as\n",

"least the same protection against wrong decisions as the test corresponding to $ A = A(\\alpha, \\beta) $ and\n",

"$ B = b(\\alpha, \\beta) $.\n",

"\n",

"\n",

"> Thus, the only disadvantage that may arise from using $ a(\\alpha, \\beta), b(\\alpha,\\beta) $ instead of\n",

"$ A(\\alpha, \\beta), B(\\alpha,\\beta) $, respectively, is that it may result in an appreciable increase in\n",

"the number of observations required by the test."

]

},

{

"cell_type": "markdown",

"id": "6dfd62b1",

"metadata": {},

"source": [

"## Simulations\n",

"\n",

"In this section, we experiment with different distributions $ f_0 $ and $ f_1 $ to examine how Wald’s test performs under various conditions.\n",

"\n",

"The goal of these simulations is to understand trade-offs between decision speed and accuracy associated with Wald’s **sequential probability ratio test**.\n",

"\n",

"Specifically, we will watch how:\n",

"\n",

"- The decision thresholds $ A $ and $ B $ (or equivalently the target error rates $ \\alpha $ and $ \\beta $) affect the average stopping time \n",

"- The discrepancy between distributions $ f_0 $ and $ f_1 $ affects average stopping times \n",

"\n",

"\n",

"We will focus on the case where $ f_0 $ and $ f_1 $ are beta distributions since it is easy to control the overlapping regions of the two densities by adjusting their shape parameters.\n",

"\n",

"First, we define a namedtuple to store all the parameters we need for our simulation studies."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "a7740ed7",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"SPRTParams = namedtuple('SPRTParams', \n",

" ['α', 'β', # Target type I and type II errors\n",

" 'a0', 'b0', # Shape parameters for f_0\n",

" 'a1', 'b1', # Shape parameters for f_1\n",

" 'N', # Number of simulations\n",

" 'seed'])"

]

},

{

"cell_type": "markdown",

"id": "3e1947a5",

"metadata": {},

"source": [

"Now we can run the simulation following Wald’s recommendation.\n",

"\n",

"We use the log-likelihood ratio and compare it to the logarithms of the thresholds $ \\log(A) $ and $ \\log(B) $.\n",

"\n",

"Below is the algorithm for the simulation.\n",

"\n",

"1. Compute thresholds $ A = \\frac{1-\\beta}{\\alpha} $, $ B = \\frac{\\beta}{1-\\alpha} $ and work with $ \\log A $, $ \\log B $. \n",

"1. Given true distribution (either $ f_0 $ or $ f_1 $): \n",

" - Initialize log-likelihood ratio $ \\log L_0 = 0 $ \n",

" - Repeat: \n",

" - Draw observation $ z $ from the true distribution \n",

" - Update: $ \\log L_{n+1} \\leftarrow \\log L_n + (\\log f_1(z) - \\log f_0(z)) $ \n",

" - If $ \\log L_{n+1} \\geq \\log A $: stop, reject $ H_0 $ \n",

" - If $ \\log L_{n+1} \\leq \\log B $: stop, accept $ H_0 $ \n",

"1. Repeat step 2 for $ N $ replications with $ N/2 $ replications\n",

" for each distribution, compute the empirical type I error $ \\hat{\\alpha} $ and type II error $ \\hat{\\beta} $ with \n",

"\n",

"\n",

"$$\n",

"\\hat{\\alpha} = \\frac{\\text{\\# of times reject } H_0 \\text{ when } f_0 \\text{ is true}}{\\text{\\# of replications with } f_0 \\text{ true}}\n",

"$$\n",

"\n",

"$$\n",

"\\hat{\\beta} = \\frac{\\text{\\# of times accept } H_0 \\text{ when } f_1 \\text{ is true}}{\\text{\\# of replications with } f_1 \\text{ true}}\n",

"$$"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "74389a00",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"@njit\n",

"def sprt_single_run(a0, b0, a1, b1, logA, logB, true_f0, seed):\n",

" \"\"\"Run a single SPRT until a decision is reached.\"\"\"\n",

" log_L = 0.0\n",

" n = 0\n",

" \n",

" # Set seed for this run\n",

" np.random.seed(seed)\n",

" \n",

" while True:\n",

" # Draw a random variable from the appropriate distribution\n",

" if true_f0:\n",

" z = np.random.beta(a0, b0)\n",

" else:\n",

" z = np.random.beta(a1, b1)\n",

" \n",

" n += 1\n",

" \n",

" # Update the log-likelihood ratio\n",

" log_f1_z = np.log(p(z, a1, b1))\n",

" log_f0_z = np.log(p(z, a0, b0))\n",

" log_L += log_f1_z - log_f0_z\n",

" \n",

" # Check stopping conditions\n",

" if log_L >= logA:\n",

" return n, False # Reject H0\n",

" elif log_L <= logB:\n",

" return n, True # Accept H0\n",

"\n",

"@njit(parallel=True)\n",

"def run_sprt_simulation(a0, b0, a1, b1, alpha, βs, N, seed):\n",

" \"\"\"SPRT simulation described by the algorithm.\"\"\"\n",

" \n",

" # Calculate thresholds\n",

" A = (1 - βs) / alpha\n",

" B = βs / (1 - alpha)\n",

" logA = np.log(A)\n",

" logB = np.log(B)\n",

" \n",

" # Pre-allocate arrays\n",

" stopping_times = np.zeros(N, dtype=np.int64)\n",

"\n",

" # Store decision and ground truth as boolean arrays\n",

" decisions_h0 = np.zeros(N, dtype=np.bool_)\n",

" truth_h0 = np.zeros(N, dtype=np.bool_)\n",

" \n",

" # Run simulations in parallel\n",

" for i in prange(N):\n",

" true_f0 = (i % 2 == 0)\n",

" truth_h0[i] = true_f0\n",

" \n",

" n, accept_f0 = sprt_single_run(\n",

" a0, b0, a1, b1, \n",

" logA, logB, \n",

" true_f0, seed + i)\n",

"\n",

" stopping_times[i] = n\n",

" decisions_h0[i] = accept_f0\n",

" \n",

" return stopping_times, decisions_h0, truth_h0\n",

"\n",

"def run_sprt(params):\n",

" \"\"\"Run SPRT simulations with given parameters.\"\"\"\n",

" \n",

" stopping_times, decisions_h0, truth_h0 = run_sprt_simulation(\n",

" params.a0, params.b0, params.a1, params.b1, \n",

" params.α, params.β, params.N, params.seed\n",

" )\n",

" \n",

" # Calculate error rates\n",

" truth_h0_bool = truth_h0.astype(bool)\n",

" decisions_h0_bool = decisions_h0.astype(bool)\n",

"\n",

" # For type I error: P(reject H0 | H0 is true)\n",

" type_I = np.sum(truth_h0_bool \n",

" & ~decisions_h0_bool) / np.sum(truth_h0_bool)\n",

" \n",

" # For type II error: P(accept H0 | H0 is false) \n",

" type_II = np.sum(~truth_h0_bool \n",

" & decisions_h0_bool) / np.sum(~truth_h0_bool)\n",

" \n",

" # Create scipy distributions for compatibility\n",

" f0 = beta(params.a0, params.b0)\n",

" f1 = beta(params.a1, params.b1)\n",

" \n",

" return {\n",

" 'stopping_times': stopping_times,\n",

" 'decisions_h0': decisions_h0_bool,\n",

" 'truth_h0': truth_h0_bool,\n",

" 'type_I': type_I,\n",

" 'type_II': type_II,\n",

" 'f0': f0,\n",

" 'f1': f1\n",

" }\n",

" \n",

"# Run simulation\n",

"params = SPRTParams(α=0.05, β=0.10, a0=2, b0=5, a1=5, b1=2, N=20000, seed=1)\n",

"results = run_sprt(params)\n",

"\n",

"print(f\"Average stopping time: {results['stopping_times'].mean():.2f}\")\n",

"print(f\"Empirical type I error: {results['type_I']:.3f} (target = {params.α})\")\n",

"print(f\"Empirical type II error: {results['type_II']:.3f} (target = {params.β})\")"

]

},

{

"cell_type": "markdown",

"id": "97218c78",

"metadata": {},

"source": [

"As anticipated in the passage above in which Wald discussed the quality of\n",

"$ a(\\alpha, \\beta), b(\\alpha, \\beta) $ given in approximation [(22.1)](#equation-eq-waldrule),\n",

"we find that the algorithm “overshoots” the error rates by giving us a\n",

"lower type I and type II error rates than the target values.\n",

"\n",

">**Note**\n",

">\n",

">For recent work on the quality of approximation [(22.1)](#equation-eq-waldrule), see, e.g., [[Fischer and Ramdas, 2024](https://python.quantecon.org/zreferences.html#id263)].\n",

"\n",

"The following code constructs a graph that lets us visualize two distributions and the distribution of times to reach a decision."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "0a2f7b71",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"fig, axes = plt.subplots(1, 2, figsize=(14, 5))\n",

"\n",

"z_grid = np.linspace(0, 1, 200)\n",

"axes[0].plot(z_grid, results['f0'].pdf(z_grid), 'b-', \n",

" lw=2, label=f'$f_0 = \\\\text{{Beta}}({params.a0},{params.b0})$')\n",

"axes[0].plot(z_grid, results['f1'].pdf(z_grid), 'r-', \n",

" lw=2, label=f'$f_1 = \\\\text{{Beta}}({params.a1},{params.b1})$')\n",

"axes[0].fill_between(z_grid, 0, \n",

" np.minimum(results['f0'].pdf(z_grid), \n",

" results['f1'].pdf(z_grid)), \n",

" alpha=0.3, color='purple', label='overlap region')\n",

"axes[0].set_xlabel('z')\n",

"axes[0].set_ylabel('density')\n",

"axes[0].legend()\n",

"\n",

"axes[1].hist(results['stopping_times'], \n",

" bins=np.arange(1, results['stopping_times'].max() + 1.5) - 0.5,\n",

" color=\"steelblue\", alpha=0.8, edgecolor=\"black\")\n",

"axes[1].set_title(\"distribution of stopping times $n$\")\n",

"axes[1].set_xlabel(\"$n$\")\n",

"axes[1].set_ylabel(\"frequency\")\n",

"\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"id": "9c1d61b3",

"metadata": {},

"source": [

"In this simple case, the stopping time stays below 10.\n",

"\n",

"We can also examine a $ 2 \\times 2 $ “confusion matrix” whose diagonal elements\n",

"show the number of times when Wald’s rule results in correct acceptance and\n",

"rejection of the null hypothesis."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "a98b99fa",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"# Accept H0 when H0 is true (correct)\n",

"f0_correct = np.sum(results['truth_h0'] & results['decisions_h0'])\n",

"\n",

"# Reject H0 when H0 is true (incorrect)\n",

"f0_incorrect = np.sum(results['truth_h0'] & (~results['decisions_h0']))\n",

"\n",

"# Reject H0 when H1 is true (correct)\n",

"f1_correct = np.sum((~results['truth_h0']) & (~results['decisions_h0']))\n",

"\n",

"# Accept H0 when H1 is true (incorrect)\n",

"f1_incorrect = np.sum((~results['truth_h0']) & results['decisions_h0'])\n",

"\n",

"# First row is when f0 is the true distribution\n",

"# Second row is when f1 is true\n",

"confusion_data = np.array([[f0_correct, f0_incorrect],\n",

" [f1_incorrect, f1_correct]])\n",

"\n",

"row_totals = confusion_data.sum(axis=1, keepdims=True)\n",

"\n",

"print(\"Confusion Matrix:\")\n",

"print(confusion_data)\n",

"\n",

"fig, ax = plt.subplots()\n",

"ax.imshow(confusion_data, cmap='Blues', aspect='equal')\n",

"ax.set_xticks([0, 1])\n",

"ax.set_xticklabels(['accept $H_0$', 'reject $H_0$'])\n",

"ax.set_yticks([0, 1])\n",

"ax.set_yticklabels(['true $f_0$', 'true $f_1$'])\n",

"\n",

"for i in range(2):\n",

" for j in range(2):\n",

" percent = confusion_data[i, j] / row_totals[i, 0] if row_totals[i, 0] > 0 else 0\n",

" color = 'white' if confusion_data[i, j] > confusion_data.max() * 0.5 else 'black'\n",

" ax.text(j, i, f'{confusion_data[i, j]}\\n({percent:.1%})',\n",

" ha=\"center\", va=\"center\", \n",

" color=color, fontweight='bold')\n",

"plt.tight_layout()\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"id": "0c649fd5",

"metadata": {},

"source": [

"Next we use our code to study three different $ f_0, f_1 $ pairs having different discrepancies between distributions.\n",

"\n",

"We plot the same three graphs we used above for each pair of distributions"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "4e1825bd",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"params_1 = SPRTParams(α=0.05, β=0.10, a0=2, b0=8, a1=8, b1=2, N=5000, seed=42)\n",

"results_1 = run_sprt(params_1)\n",

"\n",

"params_2 = SPRTParams(α=0.05, β=0.10, a0=4, b0=5, a1=5, b1=4, N=5000, seed=42)\n",

"results_2 = run_sprt(params_2)\n",

"\n",

"params_3 = SPRTParams(α=0.05, β=0.10, a0=0.5, b0=0.4, a1=0.4, \n",

" b1=0.5, N=5000, seed=42)\n",

"results_3 = run_sprt(params_3)"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "e8ee2f5e",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"def plot_sprt_results(results, params, title=\"\"):\n",

" \"\"\"Plot SPRT simulation results.\"\"\"\n",

" fig, axes = plt.subplots(1, 3, figsize=(22, 8))\n",

" \n",

" # Distribution plots\n",

" z_grid = np.linspace(0, 1, 200)\n",

" axes[0].plot(z_grid, results['f0'].pdf(z_grid), 'b-', lw=2, \n",

" label=f'$f_0 = \\\\text{{Beta}}({params.a0},{params.b0})$')\n",

" axes[0].plot(z_grid, results['f1'].pdf(z_grid), 'r-', lw=2, \n",

" label=f'$f_1 = \\\\text{{Beta}}({params.a1},{params.b1})$')\n",

" axes[0].fill_between(z_grid, 0, \n",

" np.minimum(results['f0'].pdf(z_grid), results['f1'].pdf(z_grid)), \n",

" alpha=0.3, color='purple', label='overlap')\n",

" if title:\n",

" axes[0].set_title(title, fontsize=25)\n",

" axes[0].set_xlabel('z', fontsize=25)\n",

" axes[0].set_ylabel('density', fontsize=25)\n",

" axes[0].legend(fontsize=18)\n",

" axes[0].tick_params(axis='both', which='major', labelsize=18)\n",

" \n",

" # Stopping times\n",

" max_n = max(results['stopping_times'].max(), 101)\n",

" bins = np.arange(1, min(max_n, 101)) - 0.5\n",

" axes[1].hist(results['stopping_times'], bins=bins, \n",

" color=\"steelblue\", alpha=0.8, edgecolor=\"black\")\n",

" axes[1].set_title(f'stopping times (mean={results[\"stopping_times\"].mean():.1f})', \n",

" fontsize=25)\n",

" axes[1].set_xlabel('n', fontsize=25)\n",

" axes[1].set_ylabel('frequency', fontsize=25)\n",

" axes[1].set_xlim(0, 100)\n",

" axes[1].tick_params(axis='both', which='major', labelsize=18)\n",

" \n",

" # Confusion matrix\n",

" f0_correct = np.sum(results['truth_h0'] & results['decisions_h0'])\n",

" f0_incorrect = np.sum(results['truth_h0'] & (~results['decisions_h0']))\n",

" f1_correct = np.sum((~results['truth_h0']) & (~results['decisions_h0']))\n",

" f1_incorrect = np.sum((~results['truth_h0']) & results['decisions_h0'])\n",

" \n",

" confusion_data = np.array([[f0_correct, f0_incorrect], \n",

" [f1_incorrect, f1_correct]])\n",

" row_totals = confusion_data.sum(axis=1, keepdims=True)\n",

" \n",

" im = axes[2].imshow(confusion_data, cmap='Blues', aspect='equal')\n",

" axes[2].set_title(f'errors: I={results[\"type_I\"]:.3f} '+ \n",

" f'II={results[\"type_II\"]:.3f}', fontsize=25)\n",

" axes[2].set_xticks([0, 1])\n",

" axes[2].set_xticklabels(['accept $H_0$', 'reject $H_0$'], fontsize=22)\n",

" axes[2].set_yticks([0, 1])\n",

" axes[2].set_yticklabels(['true $f_0$', 'true $f_1$'], fontsize=22)\n",

" axes[2].tick_params(axis='both', which='major', labelsize=18)\n",

"\n",

" \n",

" for i in range(2):\n",

" for j in range(2):\n",

" percent = confusion_data[i, j] / row_totals[i, 0] if row_totals[i, 0] > 0 else 0\n",

" color = 'white' if confusion_data[i, j] > confusion_data.max() * 0.5 else 'black'\n",

" axes[2].text(j, i, f'{confusion_data[i, j]}\\n({percent:.1%})',\n",

" ha=\"center\", va=\"center\", \n",

" color=color, fontweight='bold', \n",

" fontsize=18)\n",

"\n",

" plt.tight_layout()\n",

" plt.show()"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "83c6779b",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"plot_sprt_results(results_1, params_1)"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "5004f2cc",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"plot_sprt_results(results_2, params_2)"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "8b7a00f2",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"plot_sprt_results(results_3, params_3)"

]

},

{

"cell_type": "markdown",

"id": "855cb977",

"metadata": {},

"source": [

"We can see a clear pattern in the stopping times and how close “separated” the two distributions are.\n",

"\n",

"We can link this to the discussion of [Kullback–Leibler divergence](https://python.quantecon.org/likelihood_ratio_process.html#rel-entropy) in [this lecture](https://python.quantecon.org/likelihood_ratio_process.html).\n",

"\n",

"Intuitively, KL divergence is large when the distribution from one distribution to another is\n",

"large.\n",

"\n",

"When two distributions are “far apart”, it should not take long to decide which one is generating the data.\n",

"\n",

"When two distributions are “close” to each other, it takes longer to decide which one is generating the data.\n",

"\n",

"However, KL divergence is not symmetric, meaning that the divergence from one distribution to another is not necessarily the same as the reverse.\n",

"\n",

"To measure the discrepancy between two distributions, we use a metric\n",

"called [Jensen-Shannon distance](https://docs.scipy.org/doc/scipy/reference/generated/scipy.spatial.distance.jensenshannon.html) and plot it against the average stopping times."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "024c308a",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"def kl_div(h, f):\n",

" \"\"\"KL divergence\"\"\"\n",

" integrand = lambda w: f(w) * np.log(f(w) / h(w))\n",

" val, _ = quad(integrand, 0, 1)\n",

" return val\n",

"\n",

"def js_dist(a0, b0, a1, b1):\n",

" \"\"\"Jensen–Shannon distance\"\"\"\n",

" f0 = lambda w: p(w, a0, b0)\n",

" f1 = lambda w: p(w, a1, b1)\n",

"\n",

" # Mixture\n",

" m = lambda w: 0.5*(f0(w) + f1(w))\n",

" return np.sqrt(0.5*kl_div(m, f0) + 0.5*kl_div(m, f1))\n",

" \n",

"def generate_β_pairs(N=100, T=10.0, d_min=0.5, d_max=9.5):\n",

" ds = np.linspace(d_min, d_max, N)\n",

" a0 = (T - ds) / 2\n",

" b0 = (T + ds) / 2\n",

" return list(zip(a0, b0, b0, a0))\n",

"\n",

"param_comb = generate_β_pairs()\n",

"\n",

"# Run simulations for each parameter combination\n",

"js_dists = []\n",

"mean_stopping_times = []\n",

"param_list = []\n",

"\n",

"for a0, b0, a1, b1 in param_comb:\n",

" # Compute KL divergence\n",

" js_div = js_dist(a1, b1, a0, b0)\n",

" \n",

" # Run SPRT simulation with a fixed set of parameters d d\n",

" params = SPRTParams(α=0.05, β=0.10, a0=a0, b0=b0, \n",

" a1=a1, b1=b1, N=5000, seed=42)\n",

" results = run_sprt(params)\n",

" \n",

" js_dists.append(js_div)\n",

" mean_stopping_times.append(results['stopping_times'].mean())\n",

" param_list.append((a0, b0, a1, b1))\n",

"\n",

"# Create the plot\n",

"fig, ax = plt.subplots(figsize=(6, 6))\n",

"\n",

"scatter = ax.scatter(js_dists, mean_stopping_times, \n",

" s=80, alpha=0.7, c=range(len(js_dists)),\n",

" linewidth=0.5)\n",

"\n",

"ax.set_xlabel('Jensen–Shannon distance', fontsize=14)\n",

"ax.set_ylabel('mean stopping time', fontsize=14)\n",

"\n",

"plt.tight_layout()\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"id": "0a67c634",

"metadata": {},

"source": [

"The plot demonstrates a clear negative correlation between relative entropy and mean stopping time.\n",

"\n",

"As the KL divergence increases (distributions become more separated), the mean stopping time decreases exponentially.\n",

"\n",

"Below are sampled examples from the experiments we have above"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "8daabe85",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"selected_indices = [0, \n",

" len(param_comb)//6, \n",

" len(param_comb)//3, \n",

" len(param_comb)//2, \n",

" 2*len(param_comb)//3, \n",

" -1]\n",

"\n",

"fig, axes = plt.subplots(2, 3, figsize=(15, 8))\n",

"\n",

"for i, idx in enumerate(selected_indices):\n",

" row = i // 3\n",

" col = i % 3\n",

" \n",

" a0, b0, a1, b1 = param_list[idx]\n",

" js_dist = js_dists[idx]\n",

" mean_time = mean_stopping_times[idx]\n",

" \n",

" # Plot the distributions\n",

" z_grid = np.linspace(0, 1, 200)\n",

" f0_dist = beta(a0, b0)\n",

" f1_dist = beta(a1, b1)\n",

" \n",

" axes[row, col].plot(z_grid, f0_dist.pdf(z_grid), 'b-', \n",

" lw=2, label='$f_0$')\n",

" axes[row, col].plot(z_grid, f1_dist.pdf(z_grid), 'r-', \n",

" lw=2, label='$f_1$')\n",

" axes[row, col].fill_between(z_grid, 0, \n",

" np.minimum(f0_dist.pdf(z_grid), \n",

" f1_dist.pdf(z_grid)), \n",

" alpha=0.3, color='purple')\n",

" \n",

" axes[row, col].set_title(f'JS dist: {js_dist:.3f}'\n",

" +f'\\nMean time: {mean_time:.1f}', fontsize=12)\n",

" axes[row, col].set_xlabel('z', fontsize=10)\n",

" if i == 0:\n",

" axes[row, col].set_ylabel('density', fontsize=10)\n",

" axes[row, col].legend(fontsize=10)\n",

"\n",

"\n",

"plt.tight_layout()\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"id": "b0a53967",

"metadata": {},

"source": [

"Again, we find that the stopping time is shorter when the distributions are more separated\n",

"measured by Jensen-Shannon distance.\n",

"\n",

"Let’s visualize individual likelihood ratio processes to see how they evolve toward the decision boundaries."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "487470d4",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"def plot_likelihood_paths(params, n_highlight=10, n_background=200):\n",

" \"\"\"Plot likelihood ratio paths\"\"\"\n",

" \n",

" A = (1 - params.β) / params.α\n",

" B = params.β / (1 - params.α)\n",

" logA, logB = np.log(A), np.log(B)\n",

" \n",

" f0 = beta(params.a0, params.b0)\n",

" f1 = beta(params.a1, params.b1)\n",

" \n",

" fig, axes = plt.subplots(1, 2, figsize=(14, 7))\n",

" \n",

" # Generate and plot paths for each distribution\n",

" for dist_idx, (true_f0, ax, title) in enumerate([\n",

" (True, axes[0], 'true distribution: $f_0$'),\n",

" (False, axes[1], 'true distribution: $f_1$')\n",

" ]):\n",

" rng = np.random.default_rng(seed=42 + dist_idx)\n",

" paths_data = []\n",

" \n",

" for path in range(n_background + n_highlight):\n",

" log_L_path = [0.0] # Start at 0\n",

" log_L = 0.0\n",

" n = 0\n",

" \n",

" while True:\n",

" z = f0.rvs(random_state=rng) if true_f0 else f1.rvs(random_state=rng)\n",

" n += 1\n",

" log_L += np.log(f1.pdf(z)) - np.log(f0.pdf(z))\n",

" log_L_path.append(log_L)\n",

" \n",

" # Check stopping conditions\n",

" if log_L >= logA or log_L <= logB:\n",

" # True = reject H0, False = accept H0\n",

" decision = log_L >= logA\n",

" break\n",

" \n",

" paths_data.append((log_L_path, n, decision))\n",

" \n",

" for i, (path, n, decision) in enumerate(paths_data[:n_background]):\n",

" color = 'C1' if decision else 'C0'\n",

" ax.plot(range(len(path)), path, \n",

" color=color, alpha=0.2, linewidth=0.5)\n",

" \n",

" for i, (path, n, decision) in enumerate(paths_data[n_background:]):\n",

" # Color code by decision\n",

" color = 'C1' if decision else 'C0'\n",

" ax.plot(range(len(path)), path, color=color, \n",

" alpha=0.8, linewidth=1.5,\n",

" label='reject $H_0$' if decision and i == 0 else (\n",

" 'accept $H_0$' if not decision and i == 0 else ''))\n",

" \n",

" ax.axhline(y=logA, color='C1', linestyle='--', linewidth=2, \n",

" label=f'$\\\\log A = {logA:.2f}$')\n",

" ax.axhline(y=logB, color='C0', linestyle='--', linewidth=2, \n",

" label=f'$\\\\log B = {logB:.2f}$')\n",

" ax.axhline(y=0, color='black', linestyle='-', \n",

" alpha=0.5, linewidth=1)\n",

" \n",

" ax.set_xlabel(r'$n$')\n",

" ax.set_ylabel(r'$log(L_n)$')\n",

" ax.set_title(title, fontsize=20)\n",

" ax.legend(fontsize=18, loc='center right')\n",

" \n",

" y_margin = max(abs(logA), abs(logB)) * 0.2\n",

" ax.set_ylim(logB - y_margin, logA + y_margin)\n",

" \n",

" plt.tight_layout()\n",

" plt.show()\n",

"\n",

"plot_likelihood_paths(params_3, n_highlight=10, n_background=100)"

]

},

{

"cell_type": "markdown",

"id": "50582515",

"metadata": {},

"source": [

"Next, let’s adjust the decision thresholds $ A $ and $ B $ and examine how the mean stopping time and the type I and type II error rates change.\n",

"\n",

"In the code below, we break Wald’s rule by adjusting the thresholds $ A $ and $ B $ using factors $ A_f $ and $ B_f $."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "96885f98",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"@njit(parallel=True) \n",

"def run_adjusted_thresholds(a0, b0, a1, b1, alpha, βs, N, seed, A_f, B_f):\n",

" \"\"\"SPRT simulation with adjusted thresholds.\"\"\"\n",

" \n",

" # Calculate original thresholds \n",

" A_original = (1 - βs) / alpha\n",

" B_original = βs / (1 - alpha)\n",

" \n",

" # Apply adjustment factors\n",

" A_adj = A_original * A_f\n",

" B_adj = B_original * B_f\n",

" logA = np.log(A_adj)\n",

" logB = np.log(B_adj)\n",

" \n",

" # Pre-allocate arrays\n",

" stopping_times = np.zeros(N, dtype=np.int64)\n",

" decisions_h0 = np.zeros(N, dtype=np.bool_)\n",

" truth_h0 = np.zeros(N, dtype=np.bool_)\n",

" \n",

" # Run simulations in parallel\n",

" for i in prange(N):\n",

" true_f0 = (i % 2 == 0)\n",

" truth_h0[i] = true_f0\n",

" \n",

" n, accept_f0 = sprt_single_run(a0, b0, a1, b1, \n",

" logA, logB, true_f0, seed + i)\n",

" stopping_times[i] = n\n",

" decisions_h0[i] = accept_f0\n",

" \n",

" return stopping_times, decisions_h0, truth_h0, A_adj, B_adj\n",

"\n",

"def run_adjusted(params, A_f=1.0, B_f=1.0):\n",

" \"\"\"Wrapper to run SPRT with adjusted A and B thresholds.\"\"\"\n",

" \n",

" stopping_times, decisions_h0, truth_h0, A_adj, B_adj = run_adjusted_thresholds(\n",

" params.a0, params.b0, params.a1, params.b1, \n",

" params.α, params.β, params.N, params.seed, A_f, B_f\n",

" )\n",

" truth_h0_bool = truth_h0.astype(bool)\n",

" decisions_h0_bool = decisions_h0.astype(bool)\n",

" \n",

" # Calculate error rates\n",

" type_I = np.sum(truth_h0_bool \n",

" & ~decisions_h0_bool) / np.sum(truth_h0_bool)\n",

" type_II = np.sum(~truth_h0_bool \n",

" & decisions_h0_bool) / np.sum(~truth_h0_bool)\n",

" \n",

" return {\n",

" 'stopping_times': stopping_times,\n",

" 'type_I': type_I,\n",

" 'type_II': type_II,\n",

" 'A_used': A_adj,\n",

" 'B_used': B_adj\n",

" }\n",

"\n",

"adjustments = [\n",

" (5.0, 0.5), \n",

" (1.0, 1.0), \n",

" (0.3, 3.0), \n",

" (0.2, 5.0), \n",

" (0.15, 7.0), \n",

"]\n",

"\n",

"results_table = []\n",

"for A_f, B_f in adjustments:\n",

" result = run_adjusted(params_2, A_f, B_f)\n",

" results_table.append([\n",

" A_f, B_f, \n",

" f\"{result['stopping_times'].mean():.1f}\",\n",

" f\"{result['type_I']:.3f}\",\n",

" f\"{result['type_II']:.3f}\"\n",

" ])\n",

"\n",

"df = pd.DataFrame(results_table, \n",

" columns=[\"A_f\", \"B_f\", \"mean stop time\", \n",

" \"Type I error\", \"Type II error\"])\n",

"df = df.set_index([\"A_f\", \"B_f\"])\n",

"df"

]

},

{

"cell_type": "markdown",

"id": "3756b839",

"metadata": {},

"source": [

"Let’s pause and think about the table more carefully by referring back to [(22.1)](#equation-eq-waldrule).\n",

"\n",

"Recall that $ A = \\frac{1-\\beta}{\\alpha} $ and $ B = \\frac{\\beta}{1-\\alpha} $.\n",

"\n",

"When we multiply $ A $ by a factor less than 1 (making $ A $ smaller), we are effectively making it easier to reject the null hypothesis $ H_0 $.\n",

"\n",

"This increases the probability of Type I errors.\n",

"\n",

"When we multiply $ B $ by a factor greater than 1 (making $ B $ larger), we are making it easier to accept the null hypothesis $ H_0 $.\n",

"\n",

"This increases the probability of Type II errors.\n",

"\n",

"The table confirms this intuition: as $ A $ decreases and $ B $ increases from their optimal Wald values, both Type I and Type II error rates increase, while the mean stopping time decreases."

]

},

{

"cell_type": "markdown",

"id": "1ebe649d",

"metadata": {},

"source": [

"## Related Lectures\n",

"\n",

"We’ll dig deeper into some of the ideas used here in the following earlier and later lectures:\n",

"\n",

"- [this sequel](https://python.quantecon.org/wald_friedman_2.html) reformulates the problem from the perspective of a **Bayesian statistician** who views\n",

" parameters as vectors of random variables that are jointly distributed with the observable that he is concerned about. \n",

"- [this lecture](https://python.quantecon.org/exchangeable.html) discusses the key concept of **exchangeability** that underlies statistical learning \n",

"- [this lecture](https://python.quantecon.org/likelihood_ratio_process.html) describes **likelihood ratio processes** and their role in frequentist and Bayesian statistical theories \n",

"- [this lecture](https://python.quantecon.org/likelihood_bayes.html) discusses the role of likelihood ratio processes in **Bayesian learning** \n",

"- [this lecture](https://python.quantecon.org/navy_captain.html) takes up the subject of this lecture and studies whether the Captain’s hunch that the (frequentist) decision rule that the Navy had ordered him to use can be expected to be better or worse than our sequential decision rule "

]

}

],

"metadata": {

"date": 1751374729.953858,

"filename": "wald_friedman.md",

"kernelspec": {

"display_name": "Python",

"language": "python3",

"name": "python3"

},

"title": "A Problem that Stumped Milton Friedman"

},

"nbformat": 4,

"nbformat_minor": 5

}