30. Finite Markov Chains#

Contents

In addition to what’s in Anaconda, this lecture will need the following libraries:

!pip install quantecon

Show code cell output

Requirement already satisfied: quantecon in /home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages (0.8.1)

Requirement already satisfied: numba>=0.49.0 in /home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages (from quantecon) (0.60.0)

Requirement already satisfied: numpy>=1.17.0 in /home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages (from quantecon) (1.26.4)

Requirement already satisfied: requests in /home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages (from quantecon) (2.32.3)

Requirement already satisfied: scipy>=1.5.0 in /home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages (from quantecon) (1.13.1)

Requirement already satisfied: sympy in /home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages (from quantecon) (1.14.0)

Requirement already satisfied: llvmlite<0.44,>=0.43.0dev0 in /home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages (from numba>=0.49.0->quantecon) (0.43.0)

Requirement already satisfied: charset-normalizer<4,>=2 in /home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages (from requests->quantecon) (3.3.2)

Requirement already satisfied: idna<4,>=2.5 in /home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages (from requests->quantecon) (3.7)

Requirement already satisfied: urllib3<3,>=1.21.1 in /home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages (from requests->quantecon) (2.2.3)

Requirement already satisfied: certifi>=2017.4.17 in /home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages (from requests->quantecon) (2024.8.30)

Requirement already satisfied: mpmath<1.4,>=1.1.0 in /home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages (from sympy->quantecon) (1.3.0)

30.1. Overview#

Markov chains are one of the most useful classes of stochastic processes, being

simple, flexible and supported by many elegant theoretical results

valuable for building intuition about random dynamic models

central to quantitative modeling in their own right

You will find them in many of the workhorse models of economics and finance.

In this lecture, we review some of the theory of Markov chains.

We will also introduce some of the high-quality routines for working with Markov chains available in QuantEcon.py.

Prerequisite knowledge is basic probability and linear algebra.

Let’s start with some standard imports:

import matplotlib.pyplot as plt

plt.rcParams["figure.figsize"] = (11, 5) #set default figure size

import quantecon as qe

import numpy as np

from mpl_toolkits.mplot3d import Axes3D

30.2. Definitions#

The following concepts are fundamental.

30.2.1. Stochastic Matrices#

A stochastic matrix (or Markov matrix) is an

each element of

each row of

Each row of

It is too not difficult to check 1 that if

30.2.2. Markov Chains#

There is a close connection between stochastic matrices and Markov chains.

To begin, let

The set

A Markov chain

This means that, for any date

In other words, knowing the current state is enough to know probabilities for future states.

In particular, the dynamics of a Markov chain are fully determined by the set of values

By construction,

We can view

Going the other way, if we take a stochastic matrix

draw

for each

By construction, the resulting process satisfies (30.2).

30.2.3. Example 1#

Consider a worker who, at any given time

Suppose that, over a one month period,

An unemployed worker finds a job with probability

An employed worker loses her job and becomes unemployed with probability

In terms of a Markov model, we have

We can write out the transition probabilities in matrix form as

Once we have the values

What is the average duration of unemployment?

Over the long-run, what fraction of time does a worker find herself unemployed?

Conditional on employment, what is the probability of becoming unemployed at least once over the next 12 months?

We’ll cover such applications below.

30.2.4. Example 2#

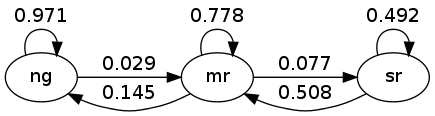

From US unemployment data, Hamilton [Hamilton, 2005] estimated the stochastic matrix

where

the frequency is monthly

the first state represents “normal growth”

the second state represents “mild recession”

the third state represents “severe recession”

For example, the matrix tells us that when the state is normal growth, the state will again be normal growth next month with probability 0.97.

In general, large values on the main diagonal indicate persistence in the process

This Markov process can also be represented as a directed graph, with edges labeled by transition probabilities

Here “ng” is normal growth, “mr” is mild recession, etc.

30.3. Simulation#

One natural way to answer questions about Markov chains is to simulate them.

(To approximate the probability of event

Nice functionality for simulating Markov chains exists in QuantEcon.py.

Efficient, bundled with lots of other useful routines for handling Markov chains.

However, it’s also a good exercise to roll our own routines — let’s do that first and then come back to the methods in QuantEcon.py.

In these exercises, we’ll take the state space to be

30.3.1. Rolling Our Own#

To simulate a Markov chain, we need its stochastic matrix

The Markov chain is then constructed as discussed above. To repeat:

At time

At each subsequent time

To implement this simulation procedure, we need a method for generating draws from a discrete distribution.

For this task, we’ll use random.draw from QuantEcon, which works as follows:

ψ = (0.3, 0.7) # probabilities over {0, 1}

cdf = np.cumsum(ψ) # convert into cummulative distribution

qe.random.draw(cdf, 5) # generate 5 independent draws from ψ

array([1, 1, 0, 1, 1])

We’ll write our code as a function that accepts the following three arguments

A stochastic matrix

PAn initial state

initA positive integer

sample_sizerepresenting the length of the time series the function should return

def mc_sample_path(P, ψ_0=None, sample_size=1_000):

# set up

P = np.asarray(P)

X = np.empty(sample_size, dtype=int)

# Convert each row of P into a cdf

n = len(P)

P_dist = [np.cumsum(P[i, :]) for i in range(n)]

# draw initial state, defaulting to 0

if ψ_0 is not None:

X_0 = qe.random.draw(np.cumsum(ψ_0))

else:

X_0 = 0

# simulate

X[0] = X_0

for t in range(sample_size - 1):

X[t+1] = qe.random.draw(P_dist[X[t]])

return X

Let’s see how it works using the small matrix

P = [[0.4, 0.6],

[0.2, 0.8]]

As we’ll see later, for a long series drawn from P, the fraction of the sample that takes value 0 will be about 0.25.

Moreover, this is true, regardless of the initial distribution from which

The following code illustrates this

X = mc_sample_path(P, ψ_0=[0.1, 0.9], sample_size=100_000)

np.mean(X == 0)

0.24633

You can try changing the initial distribution to confirm that the output is

always close to 0.25, at least for the P matrix above.

30.3.2. Using QuantEcon’s Routines#

As discussed above, QuantEcon.py has routines for handling Markov chains, including simulation.

Here’s an illustration using the same P as the preceding example

from quantecon import MarkovChain

mc = qe.MarkovChain(P)

X = mc.simulate(ts_length=1_000_000)

np.mean(X == 0)

0.251298

The QuantEcon.py routine is JIT compiled and much faster.

%time mc_sample_path(P, sample_size=1_000_000) # Our homemade code version

CPU times: user 1.3 s, sys: 3.89 ms, total: 1.31 s

Wall time: 1.31 s

array([0, 0, 1, ..., 0, 1, 1])

%time mc.simulate(ts_length=1_000_000) # qe code version

CPU times: user 17.7 ms, sys: 2.99 ms, total: 20.7 ms

Wall time: 20.2 ms

array([1, 0, 1, ..., 1, 1, 1])

30.3.2.1. Adding State Values and Initial Conditions#

If we wish to, we can provide a specification of state values to MarkovChain.

These state values can be integers, floats, or even strings.

The following code illustrates

mc = qe.MarkovChain(P, state_values=('unemployed', 'employed'))

mc.simulate(ts_length=4, init='employed')

array(['employed', 'employed', 'unemployed', 'employed'], dtype='<U10')

mc.simulate(ts_length=4, init='unemployed')

array(['unemployed', 'unemployed', 'unemployed', 'unemployed'],

dtype='<U10')

mc.simulate(ts_length=4) # Start at randomly chosen initial state

array(['unemployed', 'employed', 'employed', 'employed'], dtype='<U10')

If we want to see indices rather than state values as outputs as we can use

mc.simulate_indices(ts_length=4)

array([1, 1, 1, 1])

30.4. Marginal Distributions#

Suppose that

the marginal distribution of

What then is the marginal distribution of

To answer this, we let

Our first aim is to find

To begin, pick any

Using the law of total probability, we can decompose the probability that

In words, to get the probability of being at

Rewriting this statement in terms of marginal and conditional probabilities gives

There are

If we think of

Thus, to move a marginal distribution forward one unit of time, we postmultiply by

By postmultiplying

Hence, iterating on (30.4), the expression

As a special case, we see that if

This is very important, so let’s repeat it

and, more generally,

30.4.1. Multiple Step Transition Probabilities#

We know that the probability of transitioning from

It turns out that the probability of transitioning from

To see why, consider again (30.6), but now with a

1 in the

Inserting this into (30.6), we see that, conditional on

In particular

30.4.2. Example: Probability of Recession#

Recall the stochastic matrix

Suppose that the current state is unknown — perhaps statistics are available only at the end of the current month.

We guess that the probability that the economy is in state

The probability of being in recession (either mild or severe) in 6 months time is given by the inner product

30.4.3. Example 2: Cross-Sectional Distributions#

The marginal distributions we have been studying can be viewed either as probabilities or as cross-sectional frequencies that a Law of Large Numbers leads us to anticipate for large samples.

To illustrate, recall our model of employment/unemployment dynamics for a given worker discussed above.

Consider a large population of workers, each of whose lifetime experience is described by the specified dynamics, with each worker’s outcomes being realizations of processes that are statistically independent of all other workers’ processes.

Let

The cross-sectional distribution records fractions of workers employed and unemployed at a given moment.

For example,

What will the cross-sectional distribution be in 10 periods hence?

The answer is

This is because each worker’s state evolves according to

But when the sample is large, outcomes and probabilities are roughly equal (by an application of the Law of Large Numbers).

So for a very large (tending to infinite) population,

This is exactly the cross-sectional distribution.

30.5. Irreducibility and Aperiodicity#

Irreducibility and aperiodicity are central concepts of modern Markov chain theory.

Let’s see what they’re about.

30.5.1. Irreducibility#

Let

Two states

In view of our discussion above, this means precisely that

state

state

The stochastic matrix

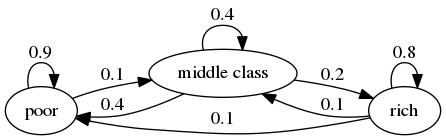

For example, consider the following transition probabilities for wealth of a fictitious set of households

We can translate this into a stochastic matrix, putting zeros where there’s no edge between nodes

It’s clear from the graph that this stochastic matrix is irreducible: we can eventually reach any state from any other state.

We can also test this using QuantEcon.py’s MarkovChain class

P = [[0.9, 0.1, 0.0],

[0.4, 0.4, 0.2],

[0.1, 0.1, 0.8]]

mc = qe.MarkovChain(P, ('poor', 'middle', 'rich'))

mc.is_irreducible

True

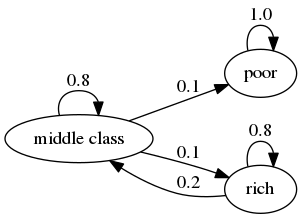

Here’s a more pessimistic scenario in which poor people remain poor forever

This stochastic matrix is not irreducible, since, for example, rich is not accessible from poor.

Let’s confirm this

P = [[1.0, 0.0, 0.0],

[0.1, 0.8, 0.1],

[0.0, 0.2, 0.8]]

mc = qe.MarkovChain(P, ('poor', 'middle', 'rich'))

mc.is_irreducible

False

We can also determine the “communication classes”

mc.communication_classes

[array(['poor'], dtype='<U6'), array(['middle', 'rich'], dtype='<U6')]

It might be clear to you already that irreducibility is going to be important in terms of long run outcomes.

For example, poverty is a life sentence in the second graph but not the first.

We’ll come back to this a bit later.

30.5.2. Aperiodicity#

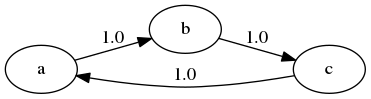

Loosely speaking, a Markov chain is called periodic if it cycles in a predictable way, and aperiodic otherwise.

Here’s a trivial example with three states

The chain cycles with period 3:

P = [[0, 1, 0],

[0, 0, 1],

[1, 0, 0]]

mc = qe.MarkovChain(P)

mc.period

3

More formally, the period of a state

In the last example,

A stochastic matrix is called aperiodic if the period of every state is 1, and periodic otherwise.

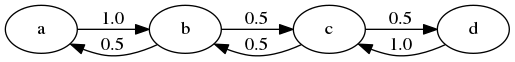

For example, the stochastic matrix associated with the transition probabilities below is periodic because, for example, state

We can confirm that the stochastic matrix is periodic with the following code

P = [[0.0, 1.0, 0.0, 0.0],

[0.5, 0.0, 0.5, 0.0],

[0.0, 0.5, 0.0, 0.5],

[0.0, 0.0, 1.0, 0.0]]

mc = qe.MarkovChain(P)

mc.period

2

mc.is_aperiodic

False

30.6. Stationary Distributions#

As seen in (30.4), we can shift a marginal distribution forward one unit of time via postmultiplication by

Some distributions are invariant under this updating process — for example,

P = np.array([[0.4, 0.6],

[0.2, 0.8]])

ψ = (0.25, 0.75)

ψ @ P

array([0.25, 0.75])

Such distributions are called stationary or invariant.

Formally, a marginal distribution

(This is the same notion of stationarity that we learned about in the lecture on AR(1) processes applied to a different setting.)

From this equality, we immediately get

This tells us an important fact: If the distribution of

Hence stationary distributions have a natural interpretation as stochastic steady states — we’ll discuss this more soon.

Mathematically, a stationary distribution is a fixed point of

Theorem. Every stochastic matrix

(We are assuming here that the state space

For proof of this result, you can apply Brouwer’s fixed point theorem, or see EDTC, theorem 4.3.5.

There can be many stationary distributions corresponding to a given stochastic matrix

For example, if

To get uniqueness an invariant distribution, the transition matrix

A subset of the state space is infinitely persistent if other parts of the state space cannot be accessed from it.

Thus, infinite persistence of a non-trivial subset is the opposite of irreducibility.

This gives some intuition for the following fundamental theorem.

Theorem. If

For any initial marginal distribution

For a proof, see, for example, theorem 5.2 of [Häggström, 2002].

(Note that part 1 of the theorem only requires irreducibility, whereas part 2 requires both irreducibility and aperiodicity)

A stochastic matrix that satisfies the conditions of the theorem is sometimes called uniformly ergodic.

A sufficient condition for aperiodicity and irreducibility is that every element of

Try to convince yourself of this.

30.6.1. Example#

Recall our model of the employment/unemployment dynamics of a particular worker discussed above.

Assuming

Let

Using

This is, in some sense, a steady state probability of unemployment — more about the interpretation of this below.

Not surprisingly it tends to zero as

30.6.2. Calculating Stationary Distributions#

As discussed above, a particular Markov matrix

That is, there can be many row vectors

In fact if

is a stationary distribution for

If we restrict attention to the case in which only one stationary distribution exists, one way to finding it is to solve the system

for

But the zero vector solves system (30.7), so we must proceed cautiously.

We want to impose the restriction that

There are various ways to do this.

One option is to regard solving system (30.7) as an eigenvector problem: a vector

A stable and sophisticated algorithm specialized for stochastic matrices is implemented in QuantEcon.py.

This is the one we recommend:

P = [[0.4, 0.6],

[0.2, 0.8]]

mc = qe.MarkovChain(P)

mc.stationary_distributions # Show all stationary distributions

array([[0.25, 0.75]])

30.6.3. Convergence to Stationarity#

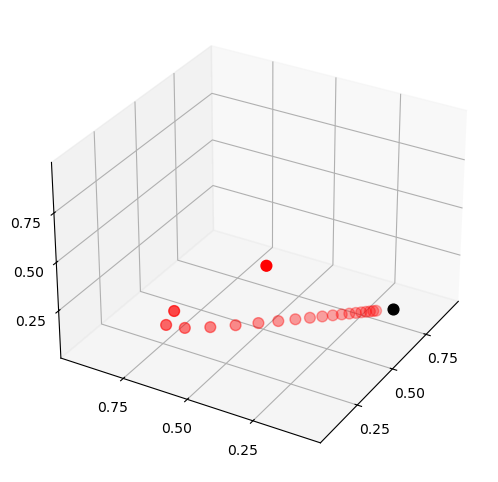

Part 2 of the Markov chain convergence theorem stated above tells us that the marginal distribution of

This adds considerable authority to our interpretation of

The convergence in the theorem is illustrated in the next figure

P = ((0.971, 0.029, 0.000),

(0.145, 0.778, 0.077),

(0.000, 0.508, 0.492))

P = np.array(P)

ψ = (0.0, 0.2, 0.8) # Initial condition

fig = plt.figure(figsize=(8, 6))

ax = fig.add_subplot(111, projection='3d')

ax.set(xlim=(0, 1), ylim=(0, 1), zlim=(0, 1),

xticks=(0.25, 0.5, 0.75),

yticks=(0.25, 0.5, 0.75),

zticks=(0.25, 0.5, 0.75))

x_vals, y_vals, z_vals = [], [], []

for t in range(20):

x_vals.append(ψ[0])

y_vals.append(ψ[1])

z_vals.append(ψ[2])

ψ = ψ @ P

ax.scatter(x_vals, y_vals, z_vals, c='r', s=60)

ax.view_init(30, 210)

mc = qe.MarkovChain(P)

ψ_star = mc.stationary_distributions[0]

ax.scatter(ψ_star[0], ψ_star[1], ψ_star[2], c='k', s=60)

plt.show()

Here

The highest red dot is an arbitrarily chosen initial marginal probability distribution

The other red dots are the marginal distributions

The black dot is

You might like to try experimenting with different initial conditions.

30.7. Ergodicity#

Under irreducibility, yet another important result obtains: for all

Here

convergence is with probability one

the result does not depend on the marginal distribution of

The result tells us that the fraction of time the chain spends at state

This gives us another way to interpret the stationary distribution — provided that the convergence result in (30.8) is valid.

The convergence asserted in (30.8) is a special case of a law of large numbers result for Markov chains — see EDTC, section 4.3.4 for some additional information.

30.7.1. Example#

Recall our cross-sectional interpretation of the employment/unemployment model discussed above.

Assume that

We saw that the stationary distribution is

In the cross-sectional interpretation, this is the fraction of people unemployed.

In view of our latest (ergodicity) result, it is also the fraction of time that a single worker can expect to spend unemployed.

Thus, in the long-run, cross-sectional averages for a population and time-series averages for a given person coincide.

This is one aspect of the concept of ergodicity.

30.8. Computing Expectations#

We sometimes want to compute mathematical expectations of functions of

and conditional expectations such as

where

Computing the unconditional expectation (30.9) is easy.

We just sum over the marginal distribution of

Here

Since

For the conditional expectation (30.10), we need to sum over

the conditional distribution of

We already know that this is

The vector

30.8.1. Iterated Expectations#

The law of iterated expectations states that

where the outer

To verify the law of iterated expectations, use equation (30.11) to substitute

and note

30.8.2. Expectations of Geometric Sums#

Sometimes we want to compute the mathematical expectation of a geometric sum, such as

In view of the preceding discussion, this is

where

Premultiplication by

30.9. Exercises#

Exercise 30.1

According to the discussion above, if a worker’s employment dynamics obey the stochastic matrix

with

In other words, if

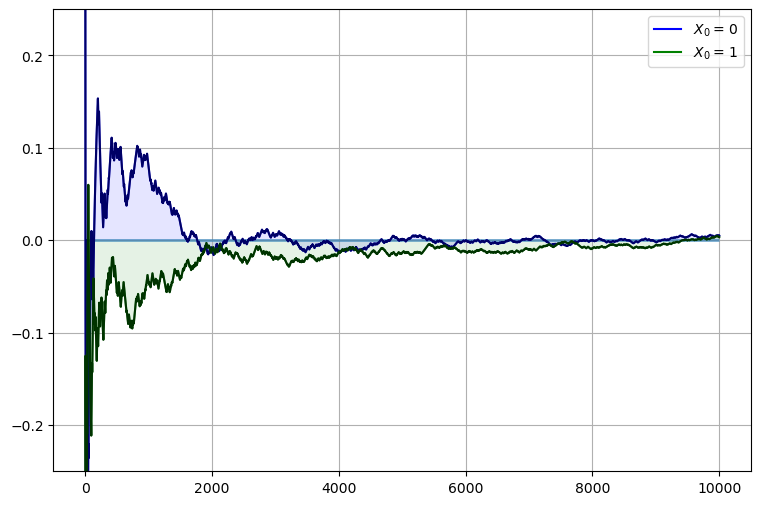

This exercise asks you to illustrate convergence by computing

You will see that this statement is true regardless of the choice of initial

condition or the values of

Solution to Exercise 30.1

We will address this exercise graphically.

The plots show the time series of

As

α = β = 0.1

N = 10000

p = β / (α + β)

P = ((1 - α, α), # Careful: P and p are distinct

( β, 1 - β))

mc = MarkovChain(P)

fig, ax = plt.subplots(figsize=(9, 6))

ax.set_ylim(-0.25, 0.25)

ax.grid()

ax.hlines(0, 0, N, lw=2, alpha=0.6) # Horizonal line at zero

for x0, col in ((0, 'blue'), (1, 'green')):

# Generate time series for worker that starts at x0

X = mc.simulate(N, init=x0)

# Compute fraction of time spent unemployed, for each n

X_bar = (X == 0).cumsum() / (1 + np.arange(N, dtype=float))

# Plot

ax.fill_between(range(N), np.zeros(N), X_bar - p, color=col, alpha=0.1)

ax.plot(X_bar - p, color=col, label=f'$X_0 = \, {x0} $')

# Overlay in black--make lines clearer

ax.plot(X_bar - p, 'k-', alpha=0.6)

ax.legend(loc='upper right')

plt.show()

<>:21: SyntaxWarning: invalid escape sequence '\,'

<>:21: SyntaxWarning: invalid escape sequence '\,'

/tmp/ipykernel_6077/221373905.py:21: SyntaxWarning: invalid escape sequence '\,'

ax.plot(X_bar - p, color=col, label=f'$X_0 = \, {x0} $')

Exercise 30.2

A topic of interest for economics and many other disciplines is ranking.

Let’s now consider one of the most practical and important ranking problems — the rank assigned to web pages by search engines.

(Although the problem is motivated from outside of economics, there is in fact a deep connection between search ranking systems and prices in certain competitive equilibria — see [Du et al., 2013].)

To understand the issue, consider the set of results returned by a query to a web search engine.

For the user, it is desirable to

receive a large set of accurate matches

have the matches returned in order, where the order corresponds to some measure of “importance”

Ranking according to a measure of importance is the problem we now consider.

The methodology developed to solve this problem by Google founders Larry Page and Sergey Brin is known as PageRank.

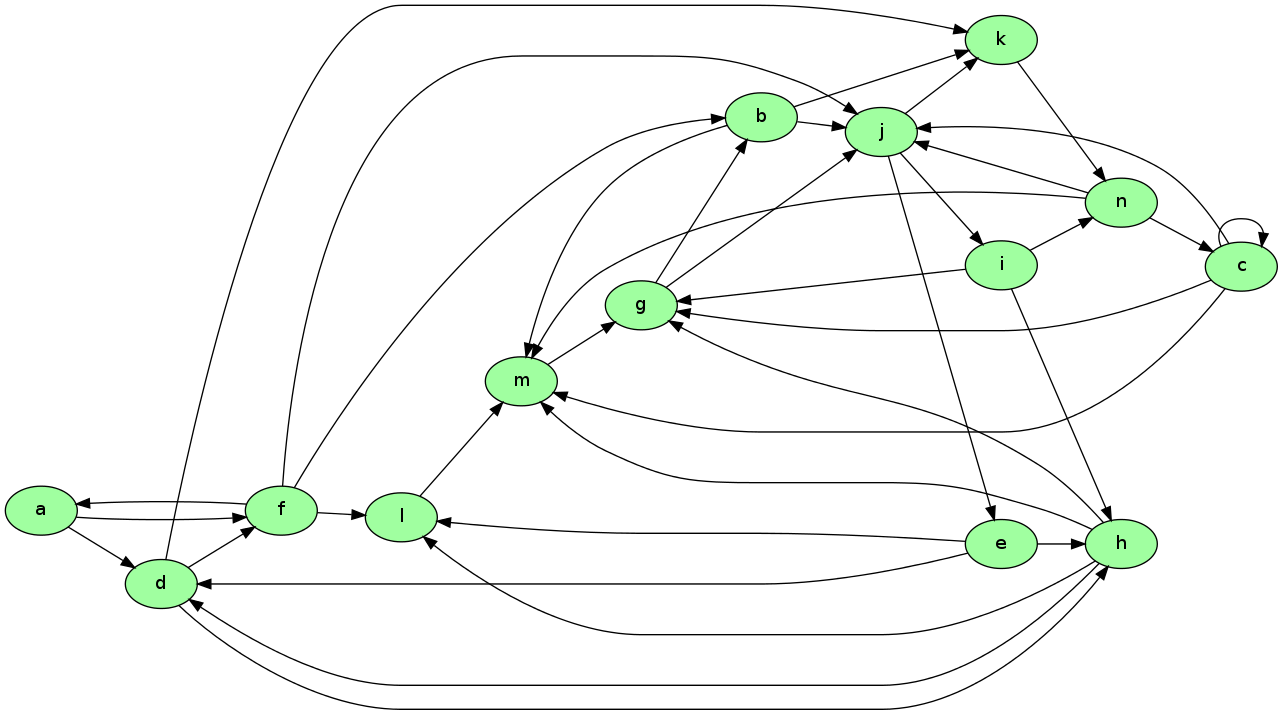

To illustrate the idea, consider the following diagram

Imagine that this is a miniature version of the WWW, with

each node representing a web page

each arrow representing the existence of a link from one page to another

Now let’s think about which pages are likely to be important, in the sense of being valuable to a search engine user.

One possible criterion for the importance of a page is the number of inbound links — an indication of popularity.

By this measure, m and j are the most important pages, with 5 inbound links each.

However, what if the pages linking to m, say, are not themselves important?

Thinking this way, it seems appropriate to weight the inbound nodes by relative importance.

The PageRank algorithm does precisely this.

A slightly simplified presentation that captures the basic idea is as follows.

Letting

where

This is a measure of the number of inbound links, weighted by their own ranking (and normalized by

There is, however, another interpretation, and it brings us back to Markov chains.

Let

The matrix

With this definition of

Writing

Hence

Let’s think of

The value

Thus, motion from page to page is that of a web surfer who moves from one page to another by randomly clicking on one of the links on that page.

Here “random” means that each link is selected with equal probability.

Since

Your exercise is to apply this ranking algorithm to the graph pictured above and return the list of pages ordered by rank.

There is a total of 14 nodes (i.e., web pages), the first named a and the last named n.

A typical line from the file has the form

d -> h;

This should be interpreted as meaning that there exists a link from d to h.

The data for this graph is shown below, and read into a file called web_graph_data.txt when the cell is executed.

%%file web_graph_data.txt

a -> d;

a -> f;

b -> j;

b -> k;

b -> m;

c -> c;

c -> g;

c -> j;

c -> m;

d -> f;

d -> h;

d -> k;

e -> d;

e -> h;

e -> l;

f -> a;

f -> b;

f -> j;

f -> l;

g -> b;

g -> j;

h -> d;

h -> g;

h -> l;

h -> m;

i -> g;

i -> h;

i -> n;

j -> e;

j -> i;

j -> k;

k -> n;

l -> m;

m -> g;

n -> c;

n -> j;

n -> m;

Overwriting web_graph_data.txt

To parse this file and extract the relevant information, you can use regular expressions.

The following code snippet provides a hint as to how you can go about this

import re

re.findall(r'\w', 'x +++ y ****** z') # \w matches alphanumerics

['x', 'y', 'z']

re.findall(r'\w', 'a ^^ b &&& $$ c')

['a', 'b', 'c']

When you solve for the ranking, you will find that the highest ranked node is in fact g, while the lowest is a.

Solution to Exercise 30.2

Here is one solution:

"""

Return list of pages, ordered by rank

"""

import re

from operator import itemgetter

infile = 'web_graph_data.txt'

alphabet = 'abcdefghijklmnopqrstuvwxyz'

n = 14 # Total number of web pages (nodes)

# Create a matrix Q indicating existence of links

# * Q[i, j] = 1 if there is a link from i to j

# * Q[i, j] = 0 otherwise

Q = np.zeros((n, n), dtype=int)

with open(infile) as f:

edges = f.readlines()

for edge in edges:

from_node, to_node = re.findall('\w', edge)

i, j = alphabet.index(from_node), alphabet.index(to_node)

Q[i, j] = 1

# Create the corresponding Markov matrix P

P = np.empty((n, n))

for i in range(n):

P[i, :] = Q[i, :] / Q[i, :].sum()

mc = MarkovChain(P)

# Compute the stationary distribution r

r = mc.stationary_distributions[0]

ranked_pages = {alphabet[i] : r[i] for i in range(n)}

# Print solution, sorted from highest to lowest rank

print('Rankings\n ***')

for name, rank in sorted(ranked_pages.items(), key=itemgetter(1), reverse=1):

print(f'{name}: {rank:.4}')

Rankings

***

g: 0.1607

j: 0.1594

m: 0.1195

n: 0.1088

k: 0.09106

b: 0.08326

e: 0.05312

i: 0.05312

c: 0.04834

h: 0.0456

l: 0.03202

d: 0.03056

f: 0.01164

a: 0.002911

<>:19: SyntaxWarning: invalid escape sequence '\w'

<>:19: SyntaxWarning: invalid escape sequence '\w'

/tmp/ipykernel_6077/251391331.py:19: SyntaxWarning: invalid escape sequence '\w'

from_node, to_node = re.findall('\w', edge)

Exercise 30.3

In numerical work, it is sometimes convenient to replace a continuous model with a discrete one.

In particular, Markov chains are routinely generated as discrete approximations to AR(1) processes of the form

Here

The variance of the stationary probability distribution of

Tauchen’s method [Tauchen, 1986] is the most common method for approximating this continuous state process with a finite state Markov chain.

A routine for this already exists in QuantEcon.py but let’s write our own version as an exercise.

As a first step, we choose

Next, we create a state space

Let

The values

If

If

Otherwise, set

The exercise is to write a function approx_markov(rho, sigma_u, m=3, n=7) that returns

Even better, write a function that returns an instance of QuantEcon.py’s MarkovChain class.

Solution to Exercise 30.3

A solution from the QuantEcon.py library can be found here.

- 1

Hint: First show that if